ML-17: Supervised Learning Series — Conclusion and Roadmap

Complete overview of the Supervised Machine Learning blog series: algorithm comparison, decision flowchart for model selection, and recommended next steps for your ML journey.

Master the Boosting paradigm: understand sequential ensemble learning, implement AdaBoost step-by-step with sample weight updates, and choose between Random Forest, AdaBoost, and Gradient Boosting.
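Here is a minimal sketch of one round of discrete AdaBoost that makes the sample weight update concrete. It assumes binary labels in {-1, +1} and the textbook update rule. The function name `adaboost_round` is hypothetical and does not come from the post itself.

```python
import math

def adaboost_round(weights, y_true, y_pred):
    """One round of discrete AdaBoost (labels assumed to be -1 or +1).

    Returns (alpha, new_weights): the weak learner's vote weight and the
    re-normalized sample weights for the next round.
    """
    total = sum(weights)
    # Weighted error of the weak learner under the current sample weights
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p) / total
    err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) for perfect or useless learners
    # More accurate learners receive a larger say in the final vote
    alpha = 0.5 * math.log((1 - err) / err)
    # t * p is -1 for mistakes, so misclassified samples are scaled up by exp(alpha)
    new = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
    norm = sum(new)
    return alpha, [w / norm for w in new]

# Four samples with uniform weights; the weak learner misclassifies only the last one
alpha, weights = adaboost_round([0.25] * 4, [1, 1, -1, -1], [1, 1, -1, 1])
print(round(alpha, 4), [round(w, 4) for w in weights])  # 0.5493 [0.1667, 0.1667, 0.1667, 0.5]
```

After one round, the single misclassified sample carries half of the total weight. This pushes the next weak learner to focus on it, and that sequential, error-correcting behavior is what separates boosting from bagging.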

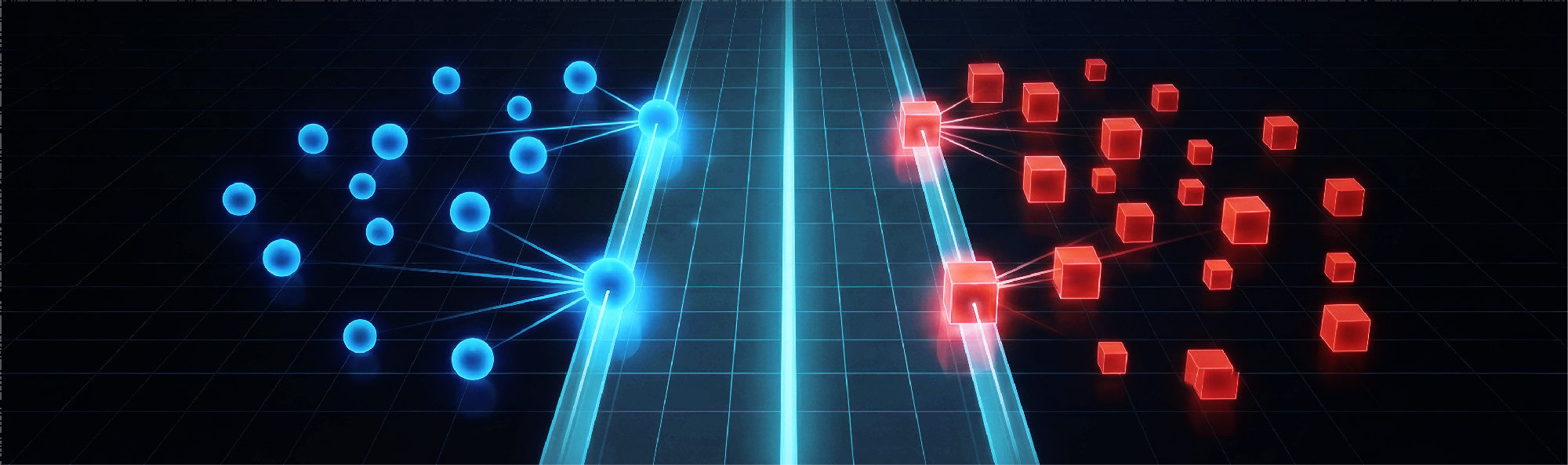

Master ensemble learning: understand why combining trees reduces variance, learn Bootstrap aggregating (Bagging), build Random Forests with feature randomization, and evaluate models using Out-of-Bag error.
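Bootstrap sampling and the out-of-bag idea fit in a few lines. The sketch below uses only Python's standard library and a hypothetical helper, `bootstrap_split`. It draws one bootstrap sample and collects the rows that were never picked. In a Random Forest, each tree would be trained on its in-bag rows and scored on its own out-of-bag rows.

```python
import random

def bootstrap_split(n, rng):
    """Draw n row indices with replacement; return (in_bag, out_of_bag)."""
    in_bag = [rng.randrange(n) for _ in range(n)]
    picked = set(in_bag)
    out_of_bag = [i for i in range(n) if i not in picked]
    return in_bag, out_of_bag

rng = random.Random(42)
n = 10_000
in_bag, oob = bootstrap_split(n, rng)
# Each row is missed with probability (1 - 1/n)^n, which approaches 1/e (about 0.368)
print(len(oob) / n)
```

Roughly a third of the rows are left out of each tree. Averaging each row's predictions over only the trees that never saw it therefore gives an error estimate without a separate validation split.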

Master decision tree classification: understand Gini impurity and entropy, learn the CART algorithm step-by-step, and apply pruning to build interpretable yet powerful models.
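Both impurity measures are short formulas over the class proportions p_k in a node. Gini impurity is 1 - sum(p_k^2), and entropy is -sum(p_k * log2(p_k)). The minimal sketch below implements both. It also includes the size-weighted child impurity that CART-style splitting compares across candidate splits. The helper names are illustrative.

```python
import math
from collections import Counter

def gini(labels):
    """Gini impurity: 1 - sum_k p_k^2 over class proportions p_k."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def entropy(labels):
    """Shannon entropy in bits: -sum_k p_k * log2(p_k)."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def split_impurity(left, right, measure=gini):
    """Impurity of a split: child impurities weighted by child size."""
    n = len(left) + len(right)
    return len(left) / n * measure(left) + len(right) / n * measure(right)

node = ["spam", "spam", "ham", "ham"]
print(gini(node), entropy(node))                         # 0.5 1.0
print(split_impurity(["spam", "spam"], ["ham", "ham"]))  # 0.0 (a perfect split)
```

A CART-style learner evaluates the candidate splits and keeps the one with the lowest weighted impurity. Equivalently, it keeps the split with the largest impurity decrease from the parent.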

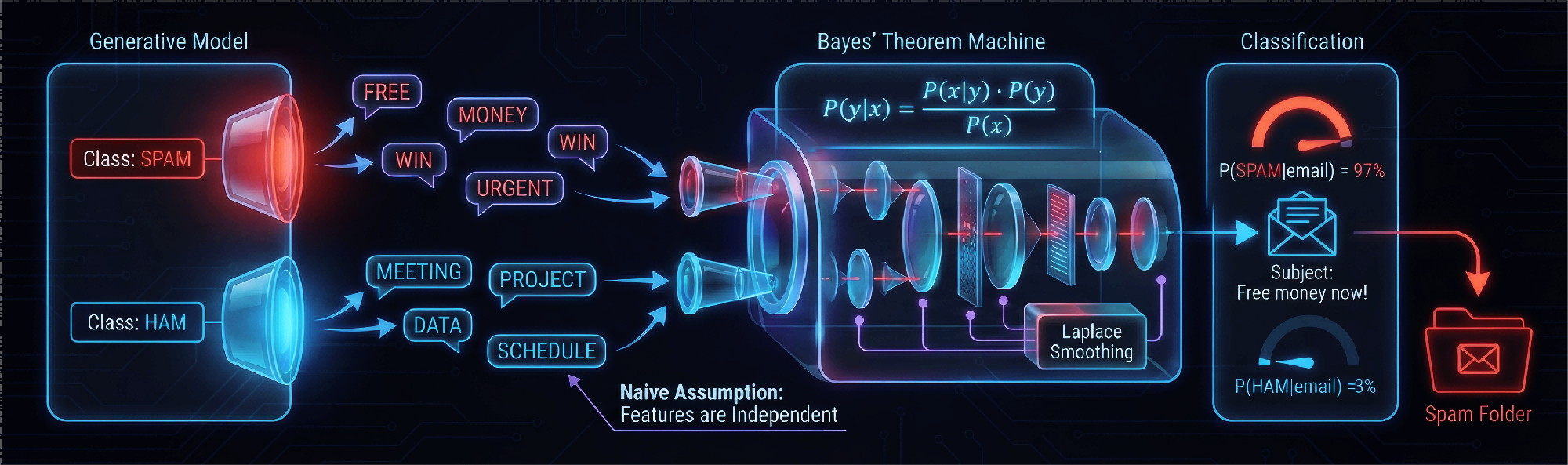

Master Naive Bayes classification: understand generative vs discriminative models, apply Bayes' theorem with the naive independence assumption, and build powerful text classifiers for spam detection and beyond.
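A bag-of-words multinomial Naive Bayes classifier shows the naive independence assumption at work. Given the class, each word contributes its own log-likelihood term, and the terms are simply summed. This is a minimal sketch with add-alpha (Laplace) smoothing on a made-up four-document corpus. The function names are illustrative, and words never seen in training are ignored.

```python
import math
from collections import Counter, defaultdict

def train_nb(docs, labels, alpha=1.0):
    """Fit multinomial Naive Bayes with add-alpha (Laplace) smoothing."""
    vocab = {w for d in docs for w in d.split()}
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc.split())
    log_prior = {c: math.log(k / len(labels)) for c, k in class_counts.items()}
    log_lik = {}
    for c in class_counts:
        total = sum(word_counts[c].values())
        log_lik[c] = {w: math.log((word_counts[c][w] + alpha) / (total + alpha * len(vocab)))
                      for w in vocab}
    return log_prior, log_lik

def predict_nb(model, doc):
    """Pick the class maximizing log P(c) + sum of log P(word | c)."""
    log_prior, log_lik = model
    scores = {c: log_prior[c] + sum(log_lik[c][w] for w in doc.split() if w in log_lik[c])
              for c in log_prior}
    return max(scores, key=scores.get)

docs = ["win money now", "cheap money offer", "meeting at noon", "lunch at noon tomorrow"]
labels = ["spam", "spam", "ham", "ham"]
model = train_nb(docs, labels)
print(predict_nb(model, "win cheap money"))  # spam
print(predict_nb(model, "lunch at noon"))    # ham
```

Working in log space turns the product of many small probabilities into a sum. This avoids floating-point underflow on long documents.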

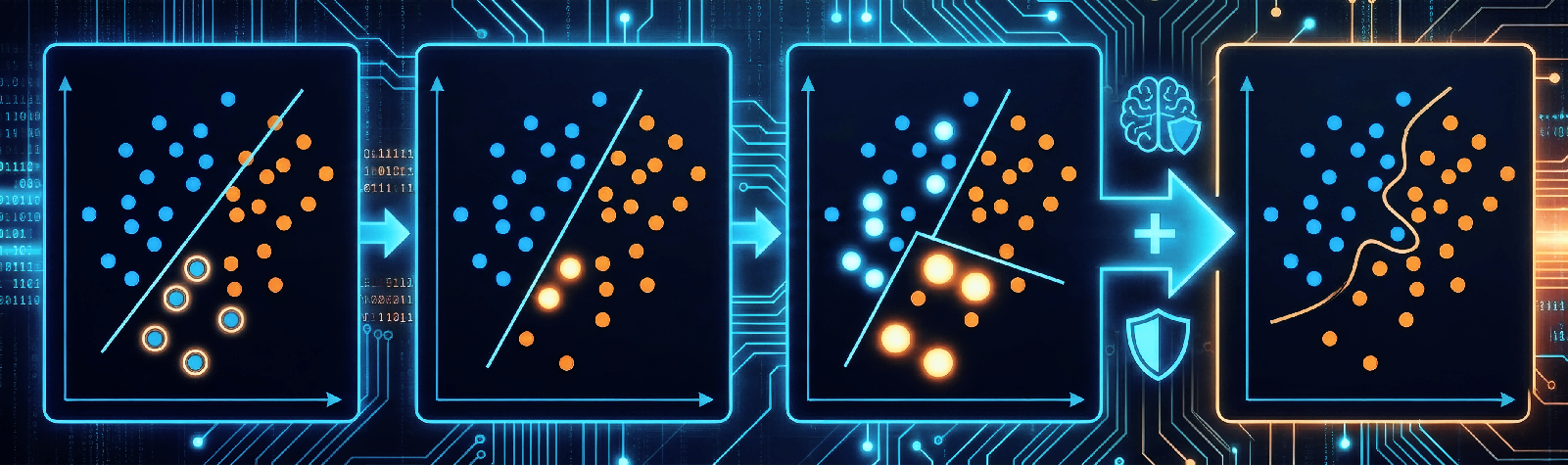

Go beyond linear SVM: master the kernel trick for nonlinear boundaries, understand RBF and polynomial kernels, and learn soft-margin SVM with the C parameter for handling real-world noisy data.
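The kernel trick is easiest to see with a degree-2 polynomial kernel on 2-D inputs. Here (x · z)^2 equals an ordinary dot product in a 3-D feature space, computed without ever building that space. The sketch below checks this numerically. It also defines the RBF kernel, exp(-gamma * ||x - z||^2), where `gamma` is the usual RBF width parameter. The helper names are illustrative.

```python
import math

def poly2_kernel(x, z):
    """Homogeneous degree-2 polynomial kernel: (x . z)^2."""
    return sum(a * b for a, b in zip(x, z)) ** 2

def phi(x):
    """Explicit feature map matching poly2_kernel for 2-D inputs."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def rbf_kernel(x, z, gamma=1.0):
    """Gaussian (RBF) kernel: exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

x, z = (1, 2), (3, 4)
explicit = sum(a * b for a, b in zip(phi(x), phi(z)))
print(poly2_kernel(x, z), round(explicit, 6))  # 121 121.0
print(rbf_kernel(x, x), rbf_kernel(x, z))      # 1.0 for identical points, near 0 for distant ones
```

A larger `gamma` makes the RBF similarity decay faster with distance, so each support vector has a more local influence on the decision boundary.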

Master hard-margin SVM from scratch: learn why maximizing margins improves generalization, derive the optimization problem step-by-step, and understand the powerful dual formulation with Lagrangian and KKT conditions.
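The primal hard-margin problem is to minimize ||w||^2 / 2 subject to y_i (w · x_i + b) >= 1 for every training point. The resulting margin width is 2 / ||w||. The sketch below does not solve this optimization problem and does not touch the dual. It only checks the constraints and reports the margin for a few hand-picked hyperplanes on a toy two-point dataset, which shows why a smaller ||w|| means a wider margin. The helper name `margin_report` is illustrative.

```python
import math

def margin_report(w, b, X, y):
    """Return (feasible, margin_width) for the hyperplane w . x + b = 0.

    feasible: every point satisfies y_i * (w . x_i + b) >= 1 (small float tolerance).
    margin_width: distance between the two supporting hyperplanes, 2 / ||w||.
    """
    feasible = all(
        yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b) >= 1 - 1e-12
        for xi, yi in zip(X, y)
    )
    return feasible, 2.0 / math.sqrt(sum(wj * wj for wj in w))

X = [(0.0, 0.0), (2.0, 2.0)]
y = [-1, 1]
for w, b in [((1.0, 1.0), -2.0), ((0.5, 0.5), -1.0), ((0.25, 0.25), -0.5)]:
    feasible, width = margin_report(w, b, X, y)
    print(w, b, feasible, round(width, 3))
# (1.0, 1.0) -2.0 True 1.414
# (0.5, 0.5) -1.0 True 2.828
# (0.25, 0.25) -0.5 False 5.657
```

Among the feasible candidates, the one with the smallest ||w|| has the widest margin. Here that margin equals the full distance between the two points, 2√2. Shrinking w any further violates the constraints.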

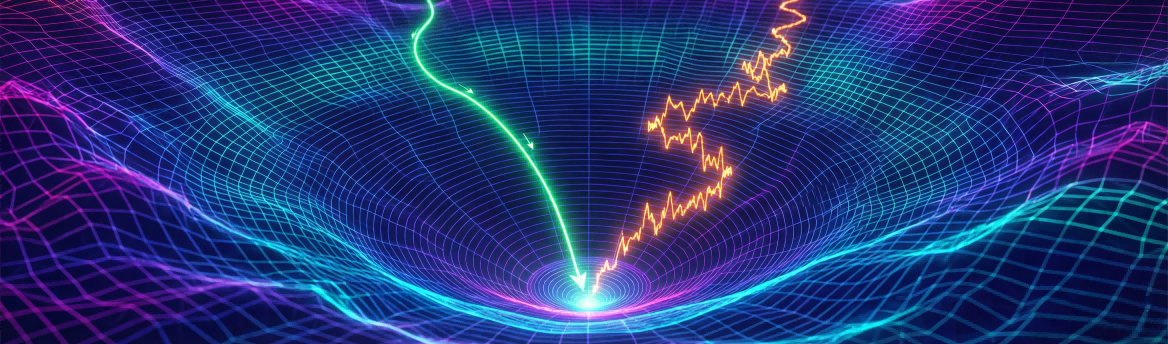

How gradient descent finds optimal parameters by iteratively descending the loss landscape. Covers learning rate tuning, Batch GD vs SGD vs Mini-batch, and advanced optimizers like Adam.
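Batch GD, SGD, and mini-batch GD differ only in how many samples feed each gradient step. The minimal sketch below fits y ≈ w * x + b by minimizing mean squared error. Setting `batch_size=len(xs)` gives batch GD, `batch_size=1` gives SGD, and anything in between is mini-batch. The toy data and hyperparameters are illustrative assumptions, and Adam is not shown.

```python
import random

def minibatch_gd(xs, ys, lr=0.05, batch_size=2, epochs=500, seed=0):
    """Fit y ~ w * x + b by gradient descent on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)  # a fresh sample order each epoch
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            m = len(batch)
            # Gradients of (1/m) * sum (w*x + b - y)^2 over the current mini-batch
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / m
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / m
            # Step against the gradient, scaled by the learning rate
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1
w, b = minibatch_gd(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

If `lr` is set too large, the updates overshoot and diverge instead of settling. That is why learning rate tuning comes first.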

Master K-Nearest Neighbors (KNN), one of the simplest yet most powerful classification algorithms: understand distance metrics, the curse of dimensionality, and optimal K selection, then implement KNN from scratch with stunning decision boundary visualizations.
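The core of KNN fits in a few lines. Measure the distance from the query to every training point, keep the k nearest, and take a majority vote. This is a minimal from-scratch sketch using Euclidean distance (`math.dist`, available in Python 3.8+) on made-up 2-D points. Ties in the vote go to whichever label `Counter.most_common` lists first, which is one arbitrary choice among several.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every training point, nearest first
    neighbors = sorted(zip((math.dist(x, query) for x in train_X), train_y))
    votes = Counter(label for _, label in neighbors[:k])
    return votes.most_common(1)[0][0]

train_X = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
train_y = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(train_X, train_y, (1, 1)))  # a
print(knn_predict(train_X, train_y, (5, 4)))  # b
```

This brute-force version scans the whole training set for every prediction. Choosing an odd k avoids tied votes in two-class problems.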

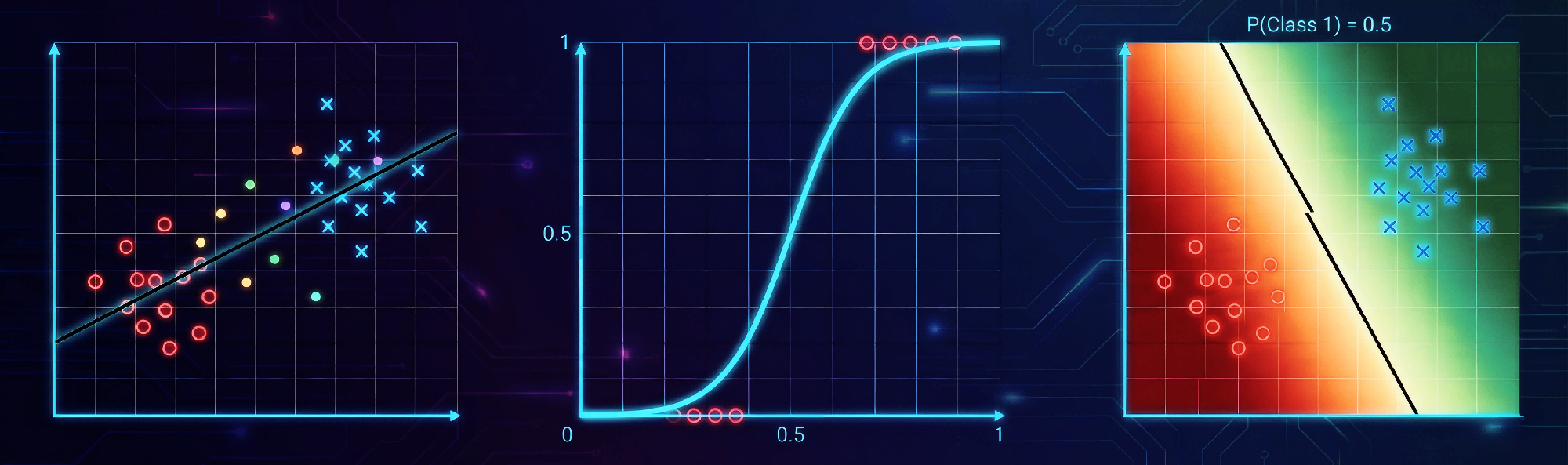

Learn why linear regression fails for classification and how the sigmoid function transforms predictions into probabilities. Includes cross-entropy loss derivation, gradient descent implementation from scratch, and multi-class strategies.
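These pieces fit together in a short from-scratch sketch. It combines a numerically stable sigmoid, the binary cross-entropy loss, and batch gradient descent. The gradient uses the fact that the derivative of cross-entropy with respect to the logit is simply p - y. The one-feature toy data and hyperparameters are illustrative assumptions.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + e^-z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def bce(p, y, eps=1e-12):
    """Binary cross-entropy for one prediction p and label y in {0, 1}."""
    p = min(max(p, eps), 1 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def train_logreg(xs, ys, lr=0.1, epochs=1000):
    """Batch gradient descent on mean cross-entropy for p = sigmoid(w * x + b)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        ps = [sigmoid(w * x + b) for x in xs]
        # d(loss)/d(logit) = p - y, so the gradients mirror linear regression's
        grad_w = sum((p - y) * x for p, x, y in zip(ps, xs, ys)) / n
        grad_b = sum(p - y for p, y in zip(ps, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]
w, b = train_logreg(xs, ys)
loss = sum(bce(sigmoid(w * x + b), y) for x, y in zip(xs, ys)) / len(xs)
print([round(sigmoid(w * x + b), 2) for x in xs], round(loss, 3))
```

This toy data is linearly separable, so the unregularized weights would keep growing with more epochs. In practice, a regularization term or early stopping keeps them finite.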