ML-07: Nonlinear Regression and Regularization

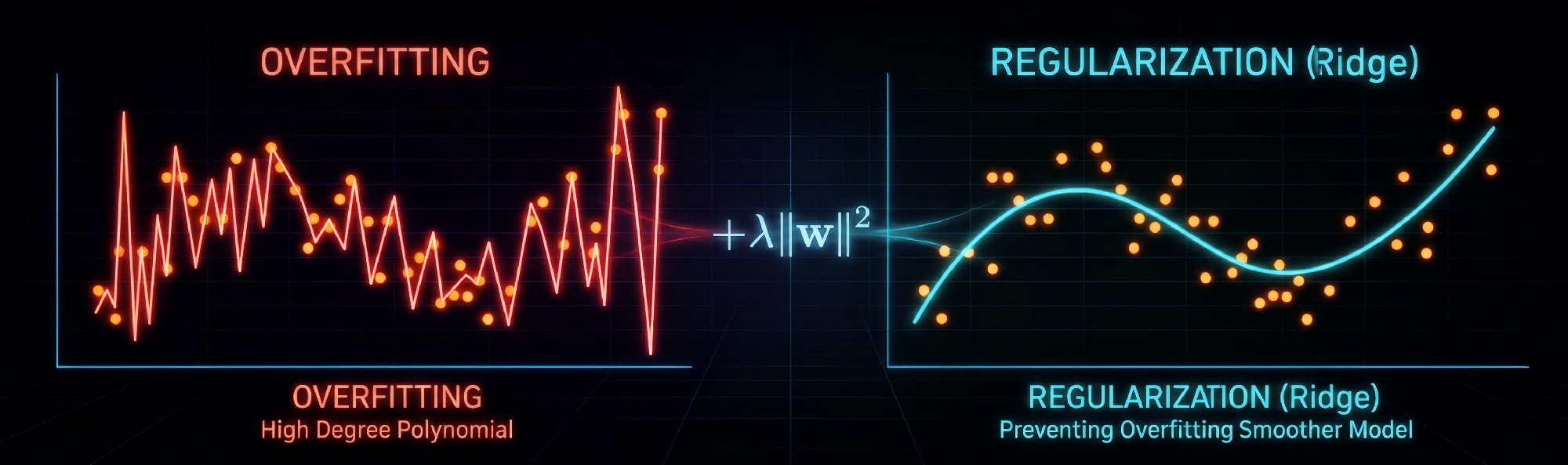

From straight lines to curves: master polynomial features, understand the bias-variance tradeoff, and tame overfitting with Ridge regularization and cross-validation.

A deep dive into linear regression: understand why squared error works, derive the normal equation step by step, explore the geometric intuition behind projections, and implement it from scratch.

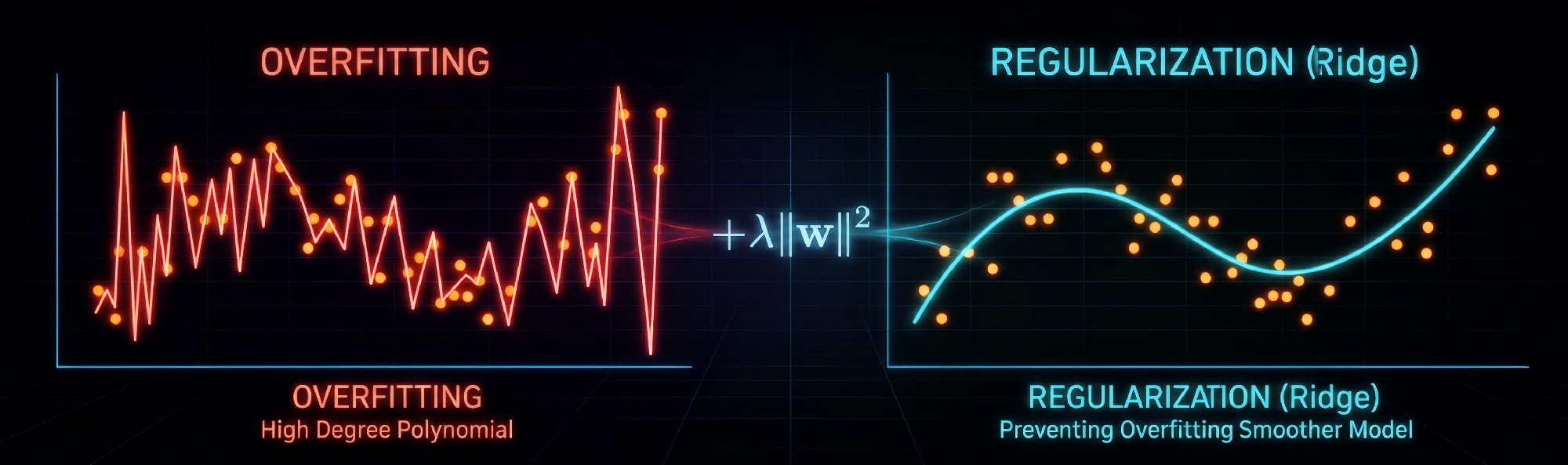

Why do simple models miss patterns while complex ones memorize noise? Master the bias-variance tradeoff to build models that generalize.

Why does a model trained on limited data work on new examples? Explore the theoretical guarantees behind ML: generalization bounds, VC dimension, and PAC learning.

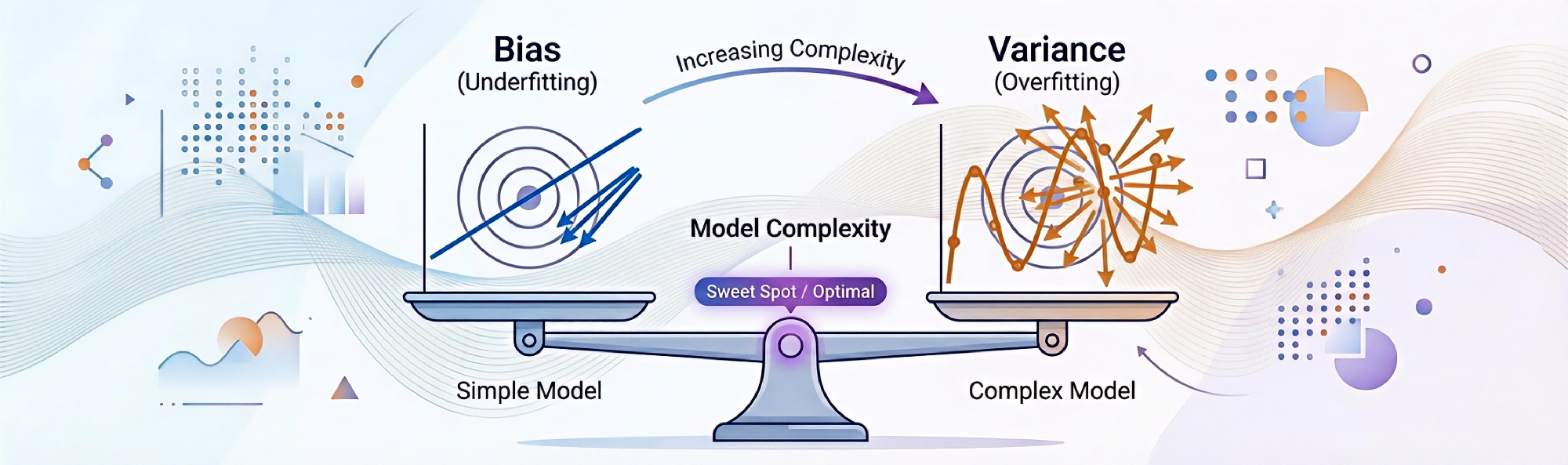

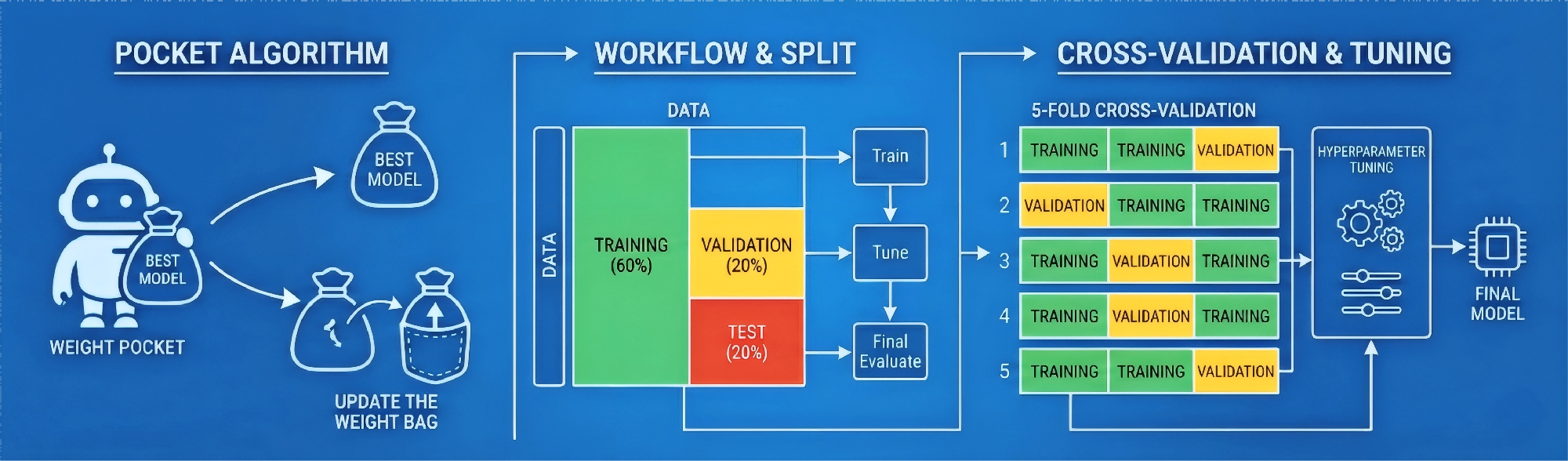

Master the pocket algorithm for non-separable data, understand parameters vs. hyperparameters, and walk through the complete ML training workflow.

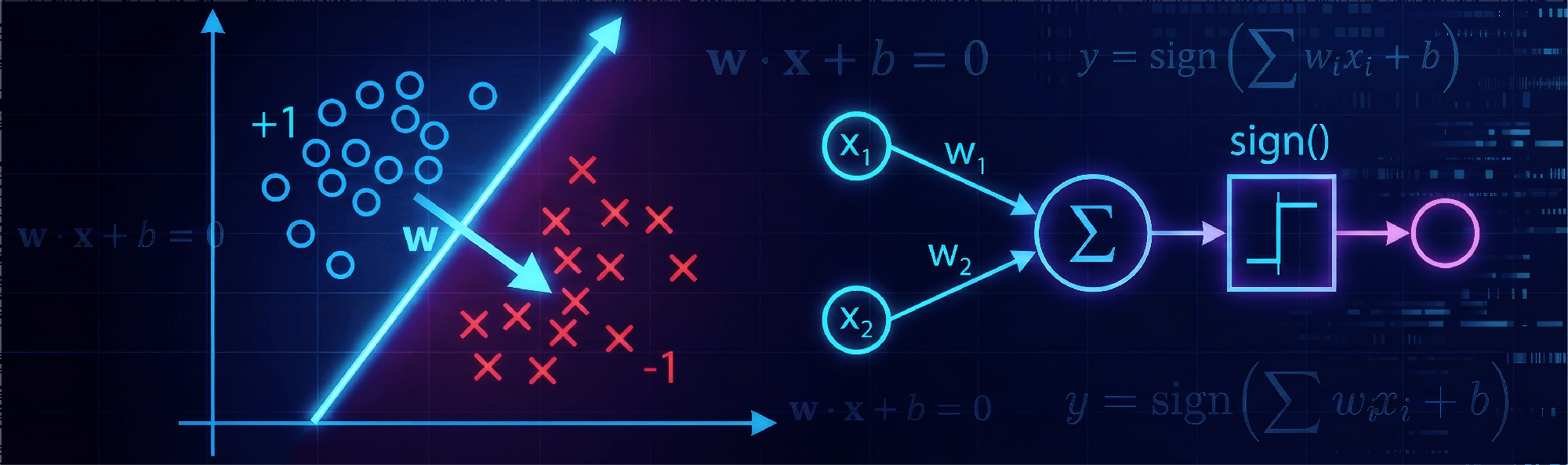

Dive into the perceptron, the building block of neural networks. Learn how a simple linear classifier draws decision boundaries, and build one from scratch.

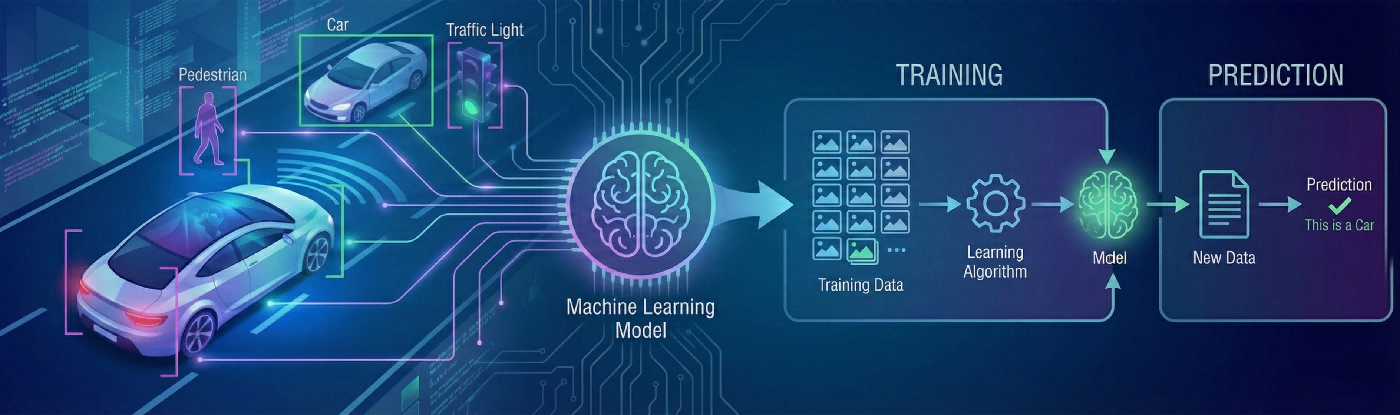

Understand what machine learning is through a self-driving car example, and master the core concepts of training and prediction.

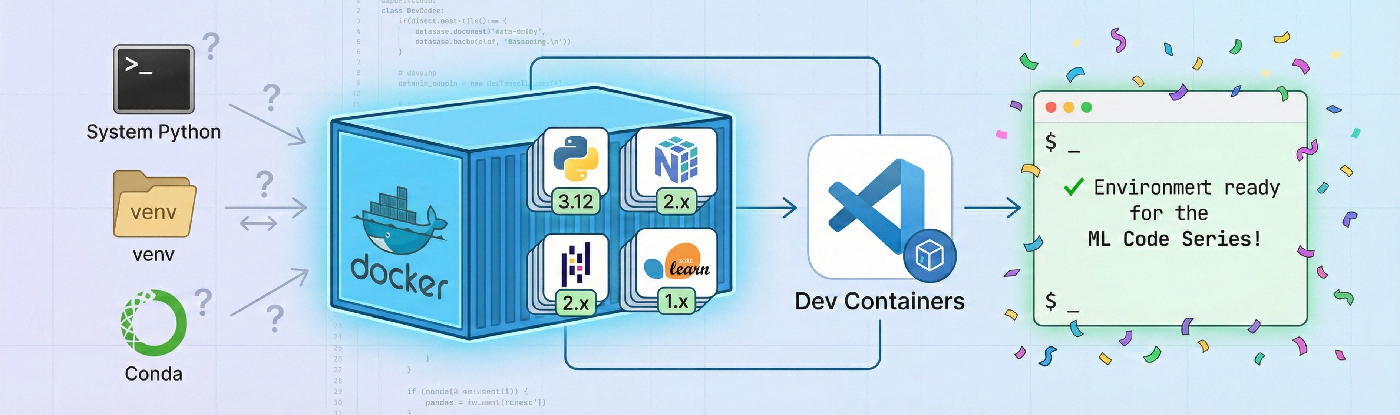

Compare Python environment options and set up a reproducible ML environment using Docker for this blog series. We examine System Python, venv, Conda, and Docker, recommending Docker + VSCode Dev Containers for guaranteed reproducibility.

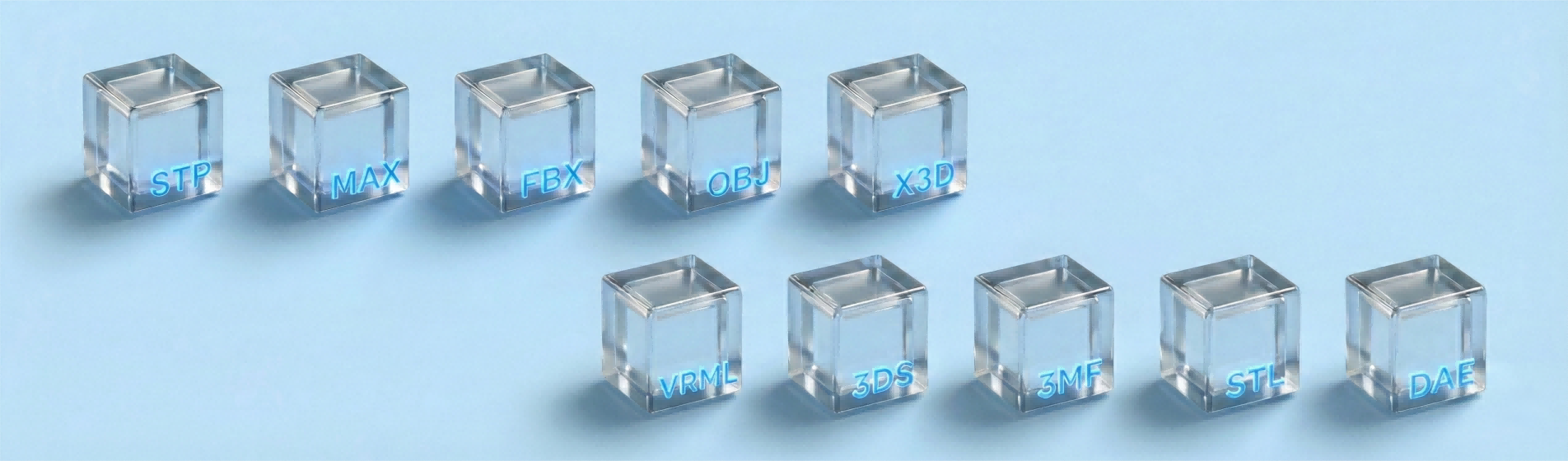

STL vs STEP vs OBJ? A complete classification of 3D file formats for CAD, 3D printing, and FEA. Learn why mesh conversion is difficult and how to bridge the gap between design and simulation.

Stop guessing and start matching. A visual, structural guide to mastering Regular Expressions using 3 foundational concepts. Transform complex 'magic spells' into readable building blocks.