UML-07: t-SNE and UMAP for Visualization

Learning Objectives

After reading this post, you will be able to:

- Understand t-SNE’s approach to preserving local structure

- Use UMAP for faster, more scalable visualizations

- Know the key parameters (perplexity, n_neighbors) and their effects

- Choose between PCA, t-SNE, and UMAP for your visualization needs

Theory

The Intuition: The Origami Master

Imagine your data is a piece of paper with a map drawn on it.

- The Manifold: Now crumple that paper into a tight ball. This is your High-Dimensional data. The points that were originally far apart might now be touching in 3D space.

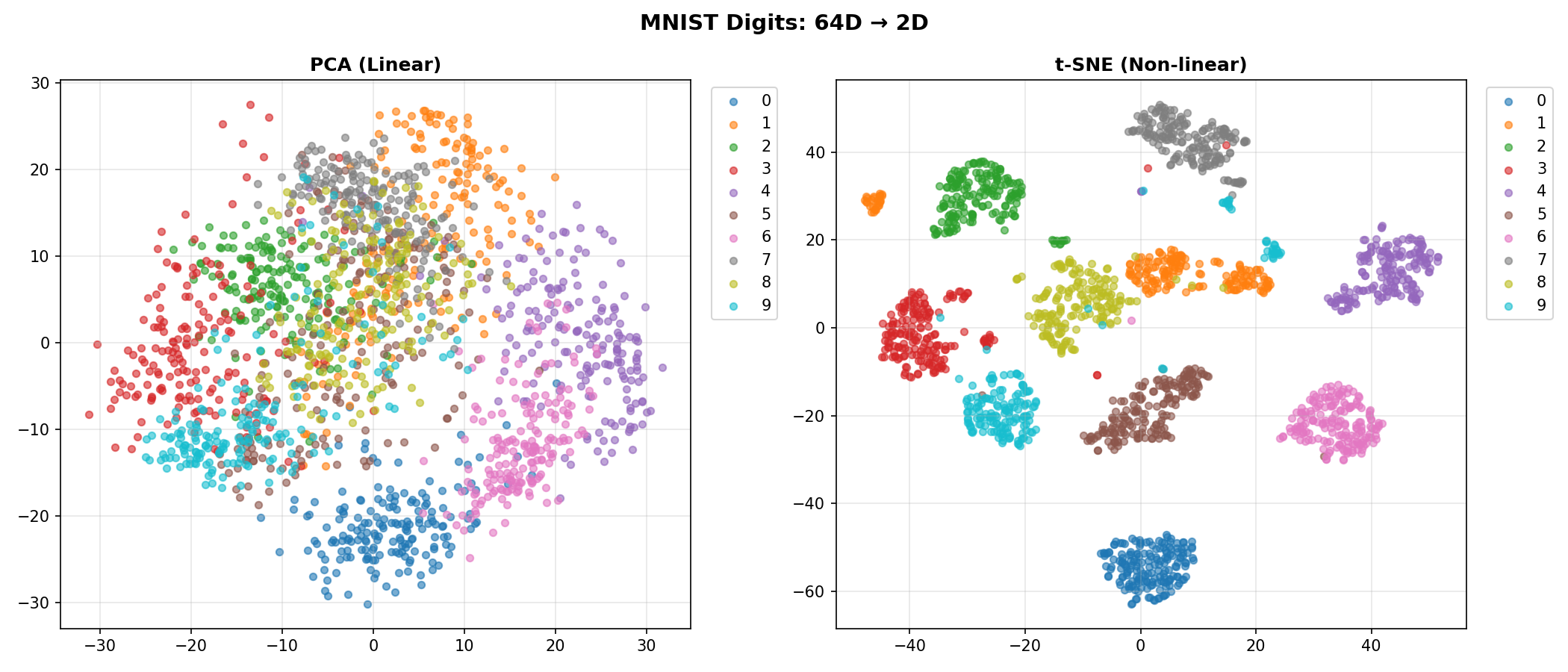

- PCA (The Hammer): PCA tries to simplify this 3D ball by smashing it flat with a hammer. It destroys the original map structure.

- t-SNE / UMAP (The Unfolder): These algorithms are like Origami Masters. They carefully unfold the crumpled ball, smoothing it out to reveal the original 2D map.

Manifold Learning is the art of unfolding this structure.

The Problem: Distance is a Lie

In the crumpled paper ball, point A (top of a fold) might physically touch point B (bottom of a fold).

- Euclidean Distance (Straight line): Says they are neighbors (Distance = 0).

- Geodesic Distance (Along the paper): Walking along the surface, they are actually very far apart!

Key Insight: PCA uses the straight-line distance, which is why it gets confused by the fold. t-SNE and UMAP try to respect the “walking distance” along the paper surface.

t-SNE: The Social Event Planner

Think of t-SNE as trying to recreate a cocktail party seating plan.

- High-D Space (The Party): People are mingling freely in a large room.

- Everyone picks their “Best Friends” (Perplexity = 30 neighbors).

- You are very close to your clique.

- Low-D Space (The Seating Chart): You have to seat everyone at a small 2D table.

- The Goal: If Alice and Bob were standing together at the party (High probability), they MUST sit together at the table.

- The Constraint: There isn’t enough room! You have to push non-friends far away to make space for friends to be close.

- KL Divergence (The Stress): The algorithm measures how “unhappy” everyone is with their seats. It shuffles people around until the “social stress” is minimized.

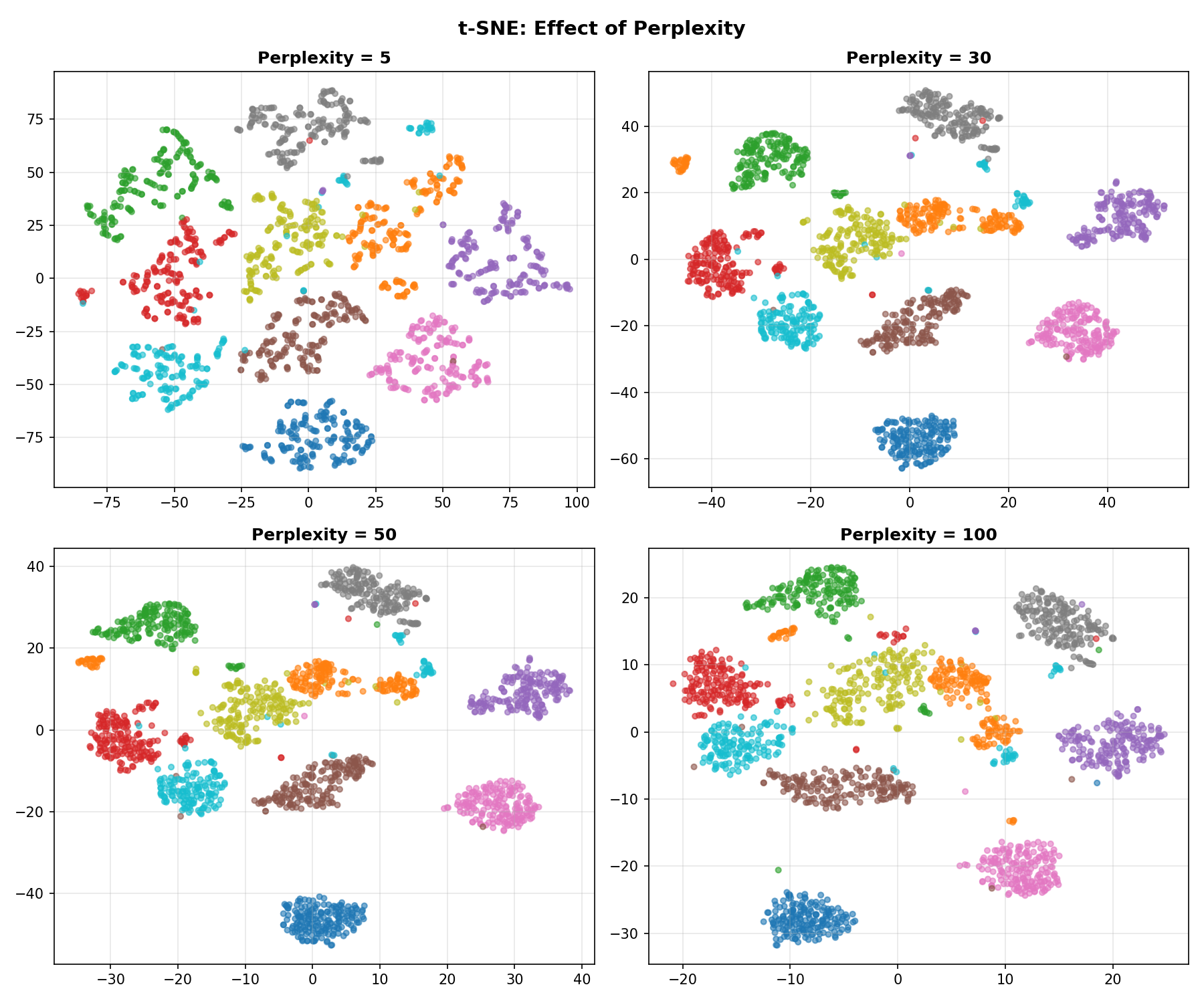

Key Parameter: Perplexity (The Thread Length)

Think of Perplexity as the length of the thread you use to connect points.

- Low (5-10): Short threads. You only connect to your immediate neighbors. The map breaks into many small, unconnected islands.

- High (50+): Long threads. You connect to points far away. Everything gets pulled into one big blob.

- Medium (30): Just right.

UMAP: The Fast Sketch Artist

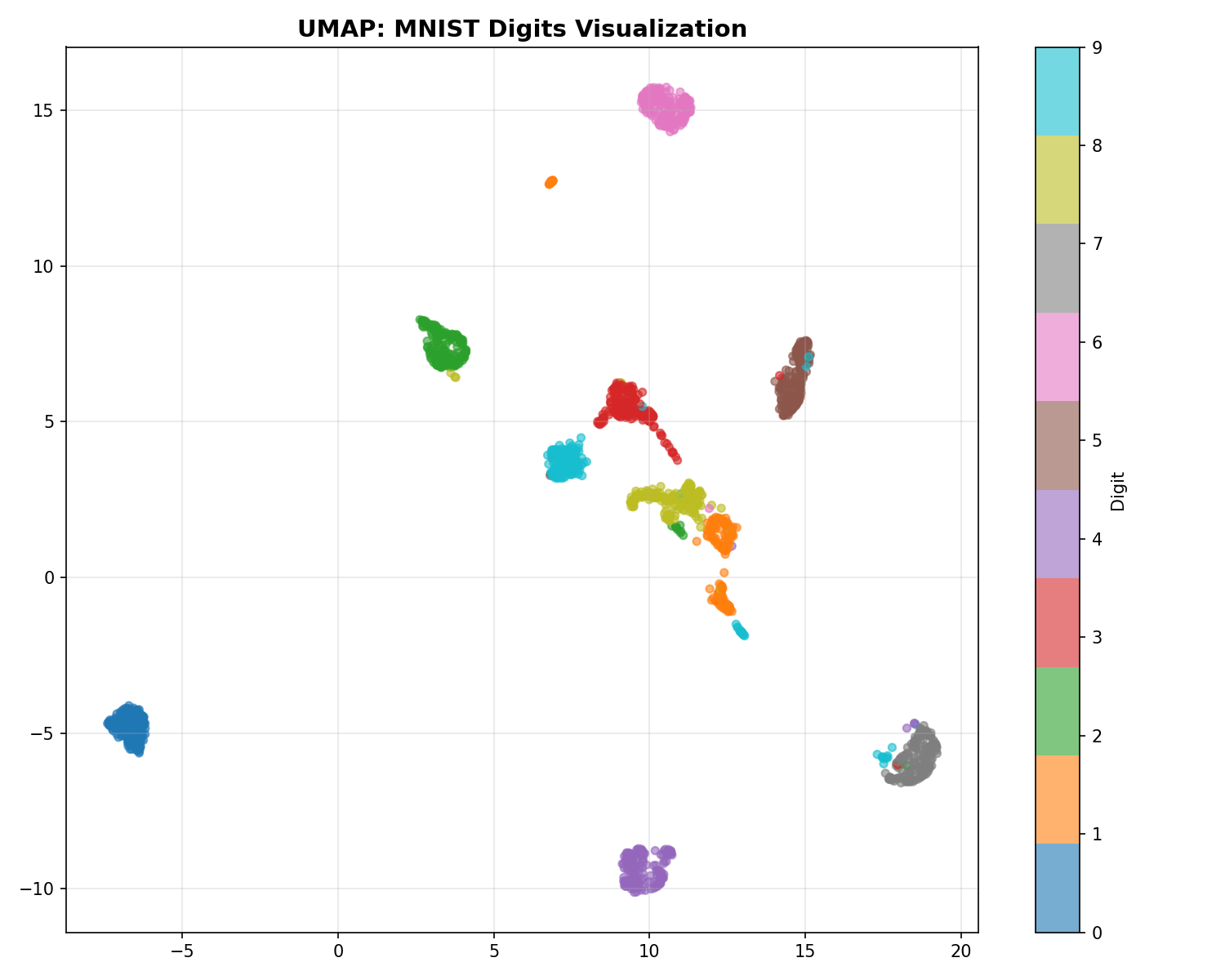

UMAP is a newer algorithm. Why is it so popular?

- Speed: t-SNE calculates interactions between every pair of points (slow). UMAP approximates the manifold structure mathematically (topology), avoiding unnecessary calculations. It’s like sketching the shape of the mountain instead of measuring every single rock.

- Global Structure: Because of its mathematical foundation, UMAP is better at keeping far-away clusters in roughly the correct relative positions (e.g., “Continent A is north of Continent B”), whereas t-SNE might put them anywhere.

| Aspect | t-SNE | UMAP |

|---|---|---|

| Speed | Slow | Fast |

| Global structure | Poor | Better |

| Scalability | Thousands | Millions |

| Reproducibility | Random (no random_state in some versions) | Reproducible |

| Parameters | Perplexity | n_neighbors, min_dist |

Code Practice

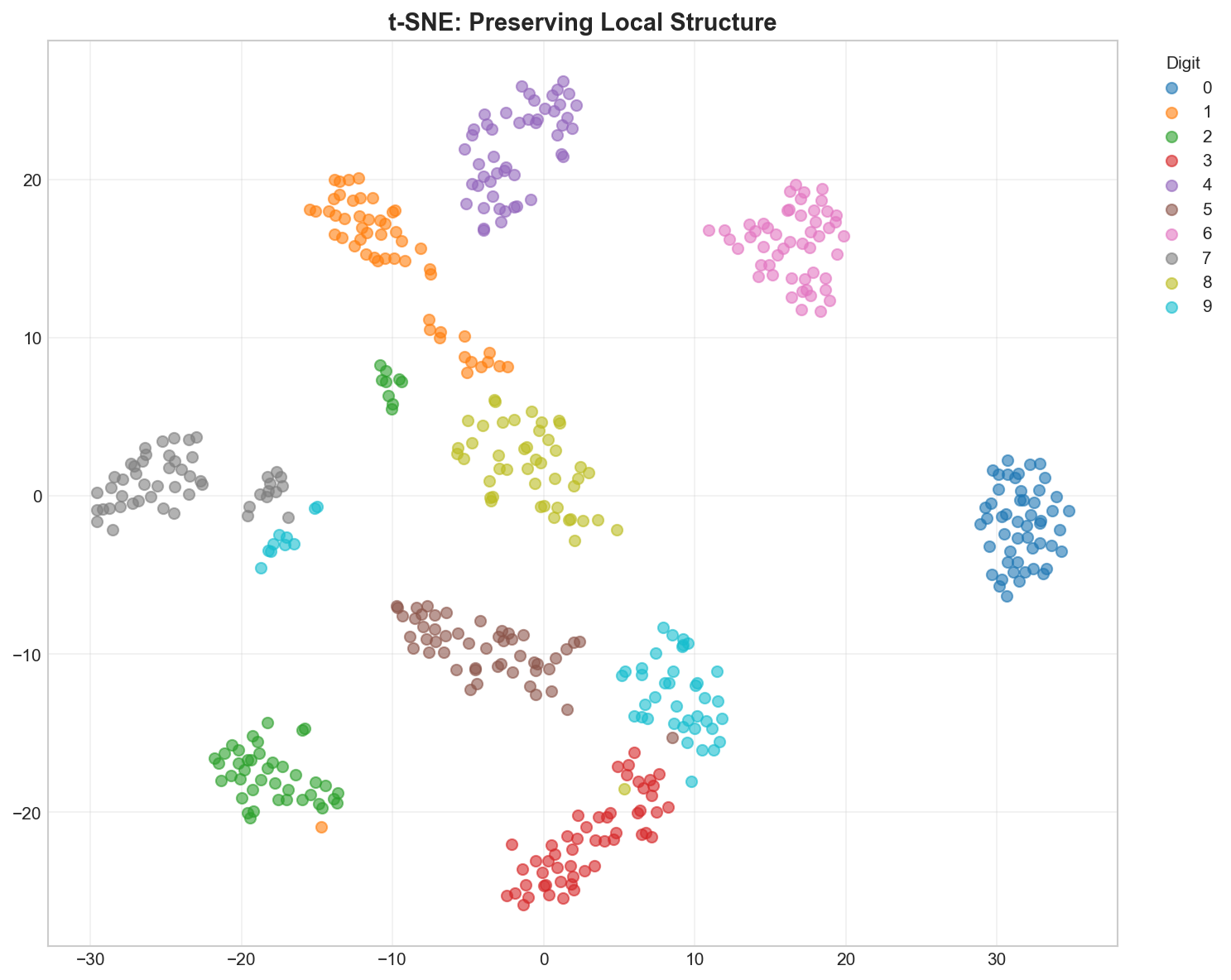

t-SNE on MNIST Digits

We’ll use the classic MNIST dataset (handwritten digits).

- The Data: 1,797 images of digits (0-9).

- The Dimensions: Each image is 8x8 pixels = 64 dimensions.

- The Goal: Can we unfold this 64-dimensional data into 2 dimensions so that all the “0"s are in one pile and all the “1"s in another?

🐍 Python

| |

Output:

Comparing PCA vs t-SNE

🐍 Python

| |

UMAP Visualization

🐍 Python

| |

Effect of Perplexity

🐍 Python

| |

Deep Dive

Common Pitfalls

t-SNE / UMAP interpretation warnings:

- Cluster sizes don’t matter — t-SNE/UMAP distort densities

- Distances between clusters don’t matter — only local structure is preserved

- Different runs give different results — always set random_state

- Don’t use for downstream ML — embeddings are for visualization only

When to Use Each Method

| Goal | Method |

|---|---|

| Quick exploration | PCA |

| Publication-quality visualization | t-SNE or UMAP |

| Large datasets (100K+) | UMAP |

| Preserve global structure | UMAP |

| Classic visualization | t-SNE |

Frequently Asked Questions

Q1: Can I use t-SNE/UMAP embeddings for clustering?

You can, but with caution:

- Cluster on original data, visualize with t-SNE/UMAP

- Or cluster on UMAP (but be aware of distortions)

Q2: My t-SNE looks different every time — why?

t-SNE is stochastic. Always set random_state for reproducibility.

Q3: How do I choose between t-SNE and UMAP?

- t-SNE: Classic choice, widely used in publications

- UMAP: Faster, better global structure, more parameters to tune

Summary

| Concept | Key Points |

|---|---|

| t-SNE | Non-linear, preserves local structure, KL divergence |

| UMAP | Faster, better global structure, topology-based |

| Perplexity | t-SNE neighborhood size (5-50) |

| n_neighbors | UMAP local connectivity (5-50) |

| Use case | Visualization only, not downstream ML |

References

- van der Maaten, L. & Hinton, G. (2008). “Visualizing Data using t-SNE”

- McInnes, L. et al. (2018). “UMAP: Uniform Manifold Approximation and Projection”

- sklearn t-SNE

- UMAP Documentation