Local LLM Deployment Toolkit: Implementing Ollama with Open-Source Clients

Summary

This post walks through an end-to-end setup for running large language models locally with the Ollama framework, paired with four open-source clients: the Page Assist browser extension for web integration, the Cherry Studio desktop client for managing multiple providers, the AnythingLLM desktop application for document-driven AI workflows, and the Continue extension for AI-assisted coding in VS Code. The tutorial covers installation steps, API configuration, and performance tuning for Windows-based LLM deployments.
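All of the clients below talk to the same local Ollama server, which listens on 127.0.0.1:11434 by default and answers a plain GET on its root path while it is running. As a minimal sketch (Python, standard library only), the helper below turns an `OLLAMA_HOST`-style value into a base URL and checks whether the server is reachable. The parsing rules, including mapping a bind-all `0.0.0.0` address to loopback and ignoring IPv6 literals, are assumptions of this sketch, not documented Ollama behavior.

```python
import os
import urllib.request

DEFAULT_HOST = "127.0.0.1"
DEFAULT_PORT = 11434


def ollama_base_url(env_value=None):
    """Turn an OLLAMA_HOST-style value into a base URL.

    Assumed parsing rules (sketch only): optional scheme, optional port,
    and 0.0.0.0 (bind-all) mapped to loopback. IPv6 literals are not handled.
    """
    raw = env_value if env_value is not None else os.environ.get("OLLAMA_HOST", "")
    value = raw.strip()
    if not value:
        return f"http://{DEFAULT_HOST}:{DEFAULT_PORT}"
    scheme = "http"
    if "://" in value:
        scheme, value = value.split("://", 1)
    value = value.rstrip("/")
    host, sep, port = value.rpartition(":")
    if not sep:  # no port given, e.g. "localhost"
        host, port = value, str(DEFAULT_PORT)
    if host in ("", "0.0.0.0"):  # bind-all address: connect via loopback instead
        host = DEFAULT_HOST
    return f"{scheme}://{host}:{port}"


def server_is_running(base_url, timeout=2.0):
    """Return True if a GET on the server root succeeds with HTTP 200."""
    try:
        with urllib.request.urlopen(base_url + "/", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError, HTTPError, refused connections, and timeouts
        return False


if __name__ == "__main__":
    url = ollama_base_url()
    print(url, "is reachable" if server_is_running(url) else "is not reachable")
```

If the check fails, start the Ollama app or run `ollama serve` before configuring any of the clients.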

Quick Comparison

| Client | Platform | Best For |
|---|---|---|
| Page Assist | Browser Extension | Quick web-based chat |
| Cherry Studio | Desktop App | Multi-provider management |
| AnythingLLM | Desktop App | Document-based RAG |
| Continue | VS Code Extension | AI-assisted coding |

Page Assist (in browsers)

- Open Source browser extension

- Official website

- GitHub repository

- Documentation

- Page Assist is an open-source browser extension that provides a sidebar and web UI for your local AI model. It allows you to interact with your model from any webpage.

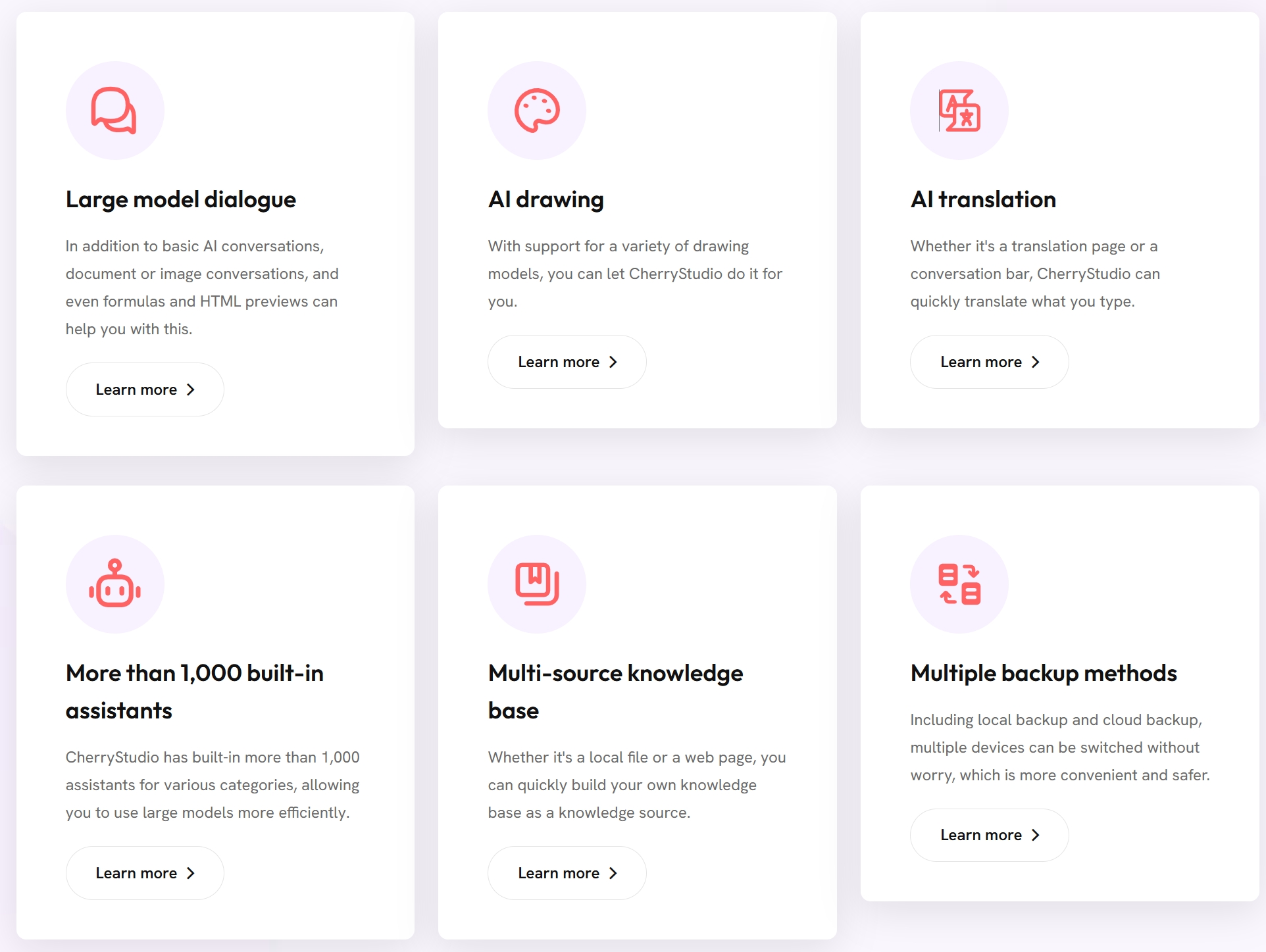
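A chat client such as Page Assist ultimately exchanges JSON with Ollama's HTTP API. The sketch below sends one non-streaming request to Ollama's `/api/chat` endpoint using only the standard library; it illustrates the request and response shape and is not Page Assist's actual code. The default address and the model name `llama3.2` are assumptions, so substitute a model you have pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # assumed default address
MODEL = "llama3.2"  # hypothetical: replace with a model you have pulled


def build_chat_payload(model, prompt, history=None):
    """Build a non-streaming /api/chat body; earlier turns go in `history`."""
    messages = list(history or [])  # copy so the caller's list is not mutated
    messages.append({"role": "user", "content": prompt})
    return {"model": model, "messages": messages, "stream": False}


def extract_reply(response_body):
    """Pull the assistant text out of a non-streaming /api/chat response."""
    data = json.loads(response_body)
    return data["message"]["content"]


def chat(prompt, model=MODEL, base_url=OLLAMA_URL, timeout=120):
    payload = json.dumps(build_chat_payload(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        base_url + "/api/chat",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_reply(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        print(chat("Say hello in one short sentence."))
    except OSError as exc:  # server not running, unknown model, timeout
        print("Could not reach Ollama:", exc)
```

Setting `"stream": False` returns a single JSON object; with streaming enabled (Ollama's default), the server sends one JSON object per line instead.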

Cherry Studio (desktop)

- Open Source desktop client

- Official website

- GitHub repository

- Documentation

- Cherry Studio is a desktop client that supports multiple LLM providers and is available on Windows, macOS, and Linux.

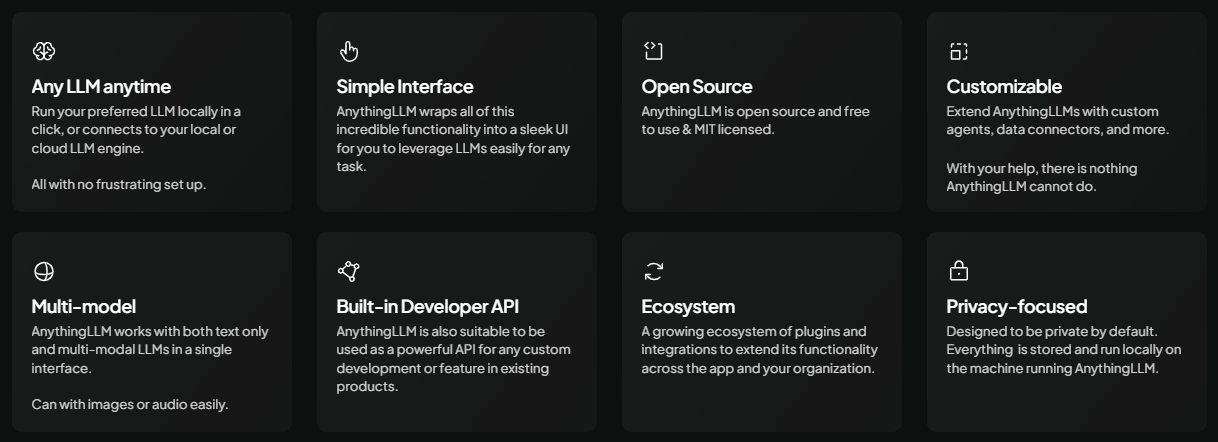
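Clients that manage several providers commonly use the OpenAI chat-completions format, and Ollama exposes an OpenAI-compatible endpoint under `/v1`. The sketch below shows how a local model can be addressed as just another provider entry. The `PROVIDERS` table and the model name are hypothetical illustrations, not Cherry Studio's configuration format. Ollama ignores the API key, but OpenAI-style clients usually require one to be set.

```python
import json
import urllib.request

# Hypothetical provider table: each entry points at an OpenAI-compatible base URL.
PROVIDERS = {
    "ollama": {"base_url": "http://127.0.0.1:11434/v1", "api_key": "ollama"},
}
MODEL = "llama3.2"  # hypothetical: replace with a model you have pulled


def build_request(provider, model, prompt):
    """Return (url, headers, body) for an OpenAI-style chat completion."""
    cfg = PROVIDERS[provider]
    url = cfg["base_url"].rstrip("/") + "/chat/completions"
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {cfg['api_key']}",  # ignored by Ollama
    }
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return url, headers, body


def extract_content(response_body):
    """Read the first choice's message text from a chat-completions response."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


def complete(provider, model, prompt, timeout=120):
    url, headers, body = build_request(provider, model, prompt)
    req = urllib.request.Request(
        url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_content(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        print(complete("ollama", MODEL, "Name one benefit of running LLMs locally."))
    except OSError as exc:  # server not running, unknown model, timeout
        print("Request failed:", exc)
```

Because only the base URL and key differ between entries, a cloud provider could be added to the same table without changing the request code.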

AnythingLLM (desktop)

- Open Source desktop client

- Official website

- GitHub repository

- Documentation

- AnythingLLM is a desktop application for chatting with your documents using any LLM, including local models served by Ollama. It aims to let you run state-of-the-art LLMs completely privately with minimal technical setup.

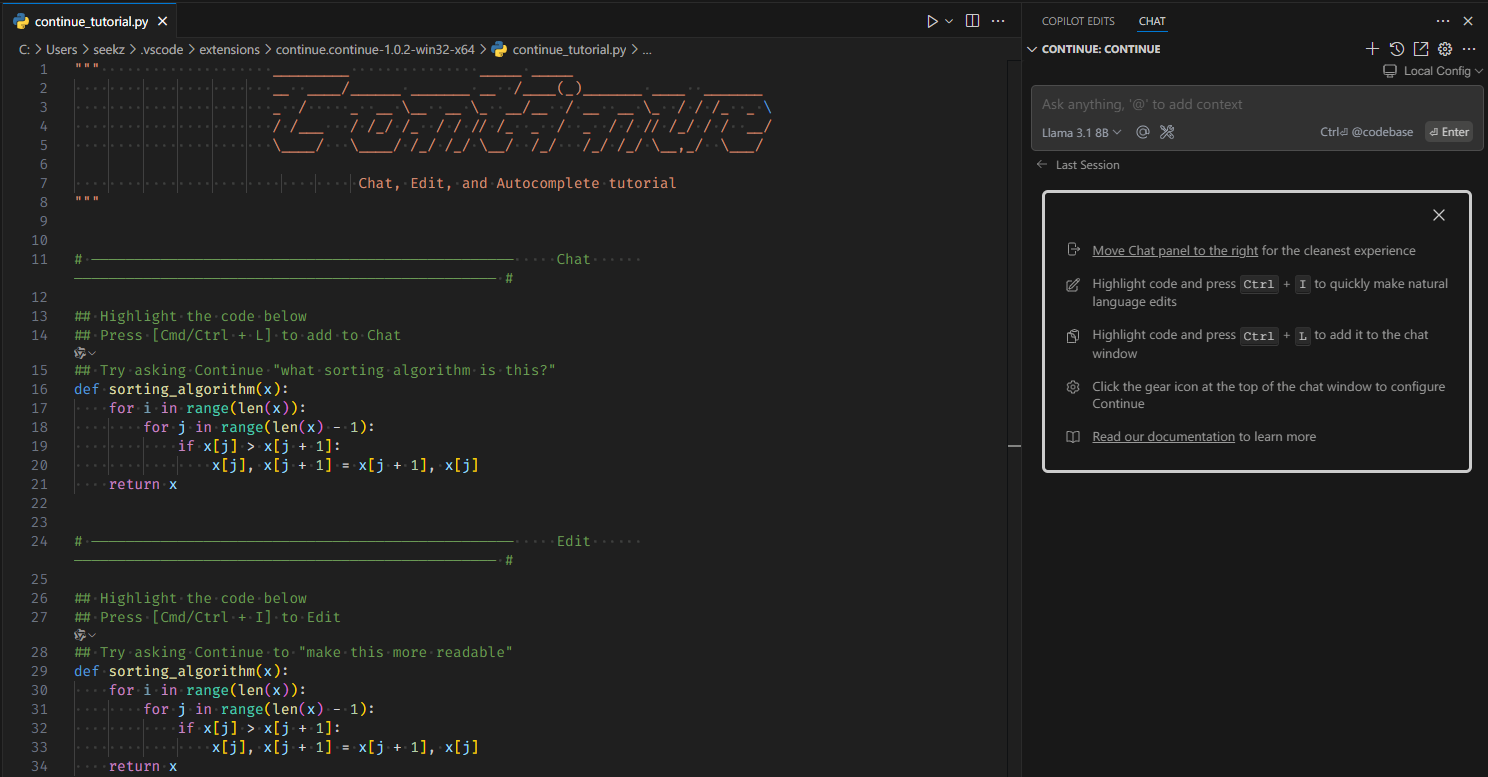
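Document chat in tools like AnythingLLM follows the retrieval-augmented generation (RAG) pattern: embed document chunks, find the chunks closest to the question, and pass them to the model as context. The sketch below illustrates that retrieval step with cosine similarity and Ollama's `/api/embed` endpoint. It is a generic example of the technique, not AnythingLLM's implementation, and the embedding model name is an assumption.

```python
import json
import math
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # assumed default address
EMBED_MODEL = "nomic-embed-text"  # hypothetical choice of embedding model


def embed(texts, model=EMBED_MODEL, base_url=OLLAMA_URL, timeout=120):
    """Return one embedding vector per input text via Ollama's /api/embed."""
    body = json.dumps({"model": model, "input": texts}).encode("utf-8")
    req = urllib.request.Request(
        base_url + "/api/embed",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["embeddings"]


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, chunk_vecs, chunks, k=3):
    """Rank document chunks by similarity to the query, highest first."""
    scored = sorted(
        zip(chunks, chunk_vecs), key=lambda cv: cosine(query_vec, cv[1]), reverse=True
    )
    return [chunk for chunk, _ in scored[:k]]


def build_prompt(question, context_chunks):
    """Assemble a grounded prompt from the retrieved chunks."""
    context = "\n\n".join(context_chunks)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    chunks = ["Ollama serves models on port 11434.", "Page Assist is a browser extension."]
    query = "Which port does Ollama use?"
    try:
        vectors = embed(chunks)
        best = top_k(embed([query])[0], vectors, chunks, k=1)
        print(build_prompt(query, best))
    except OSError as exc:  # server not running or embedding model not pulled
        print("Embedding request failed:", exc)
```

A real application would also split long documents into chunks and store the vectors in a database rather than recomputing them on every query.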

Continue (in VS Code)

- Open Source VS Code and JetBrains extension

- Official website

- GitHub repository

- Documentation

- Continue enables developers to create, share, and use custom AI code assistants through its open-source VS Code and JetBrains extensions and a hub of models, rules, prompts, docs, and other building blocks.

Installation tips

- Launch Visual Studio Code and locate the Continue extension icon in the activity bar

- Click the ‘remain Local’ tab and follow the guided workflow to download the default Ollama models

- Each downloaded model displays a green checkmark badge to indicate that it is ready

- Click the ‘Connect’ button to start using Continue's features.
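The green checkmarks in Continue reflect which models are available locally. The same check can be scripted against Ollama's `/api/tags` endpoint, which lists pulled models. In the sketch below, the `REQUIRED` list is hypothetical; replace it with the models your setup actually uses. Untagged names are compared as `<name>:latest`, which matches how Ollama reports them.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # assumed default address
# Hypothetical list: replace with the models your Continue setup actually uses.
REQUIRED = ["llama3.1:8b", "qwen2.5-coder:1.5b", "nomic-embed-text"]


def normalize(name):
    """Append ':latest' when the final path segment carries no tag."""
    last = name.rsplit("/", 1)[-1]
    return name if ":" in last else name + ":latest"


def installed_models(tags_body):
    """Parse an /api/tags response into a set of normalized model names."""
    data = json.loads(tags_body)
    return {normalize(m["name"]) for m in data.get("models", [])}


def missing_models(required, installed):
    """Return the required models that are not yet downloaded, in order."""
    return [name for name in required if normalize(name) not in installed]


def fetch_tags(base_url=OLLAMA_URL, timeout=10):
    with urllib.request.urlopen(base_url + "/api/tags", timeout=timeout) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    try:
        missing = missing_models(REQUIRED, installed_models(fetch_tags()))
    except OSError as exc:  # server not running or unreachable
        print("Could not reach Ollama:", exc)
    else:
        if missing:
            print("Not downloaded yet:", ", ".join(missing))
        else:
            print("All required models are available.")
```

Any model reported as missing can be downloaded with `ollama pull <name>` before returning to Continue.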